Hey there! I'm a Computer Science major at Princeton University minoring in Physics and Statistics & Machine Learning. I'm interested in and have experience with machine learning, econometrics, autonomous vehicles, web development, and quantum computing. I love learning about new technologies in my free time, as well as running, rock climbing, and investing in the stock market. Check out my projects below, and find more information about me by clicking the links in the navbar. Feel free to drop me a line!

Skills

General Purpose

Python, C, C++, Java

Stats and ML

scikit-learn, PyTorch, StatsModels, TensorFlow

Web Development

JavaScript/ES6, Django, Flask, React+Redux, Jekyll

Other

Git, Linux, ANTLR, ROS, MPI, OpenMP

Projects

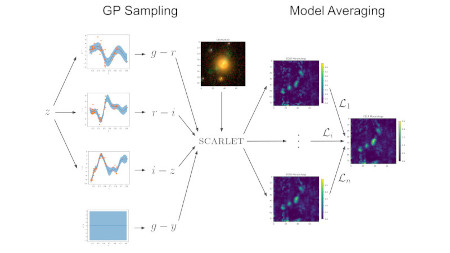

Model-based search for extended emission-line regions.

The recently developed method SCARLET by Melchior et al. (2018) allows for the morphologies and spectra of individual astronomical sources to be extracted from large data sets even when they are partially or completely overlapping. In this work, we search for fluorescent emissions of gas known as extended emission-line regions (EELRs), which are energized by active galactic nuclei (AGN) at the centers of galaxies. EELRs have so far rarely been observed cleanly because their host galaxies tend to dominate the emission, but SCARLET is designed to separate multiple sources of emission. We develop an approach to extract EELRs from multi-band images without the need for targeted spectroscopic measurements. Our approach uses Gaussian Process regression to generate samples of likely EELR spectra, and then computes a likelihood-weighted model average of each EELR’s morphology and spectrum as obtained from SCARLET. This model-based search approach is especially useful for finding known or suspected physical processes in the growing data volumes of future large astronomical surveys.
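The likelihood-weighted averaging step can be illustrated with a minimal sketch. It assumes each sampled spectrum has already produced a fitted model image and a log-likelihood; the function name, array shapes, and example values are hypothetical, and nothing here reflects the SCARLET API or the project's actual code.

```python
import numpy as np

def likelihood_weighted_average(models, log_likelihoods):
    """Average candidate models, weighting each by its normalized likelihood."""
    models = np.asarray(models, dtype=float)          # shape (n_samples, ...)
    log_l = np.asarray(log_likelihoods, dtype=float)  # shape (n_samples,)
    # Subtract the maximum before exponentiating to avoid overflow.
    weights = np.exp(log_l - log_l.max())
    weights /= weights.sum()
    # Contract the sample axis: sum_i weights[i] * models[i].
    return np.tensordot(weights, models, axes=1), weights

# Hypothetical example: three 2x2 "morphologies" with made-up log-likelihoods.
candidates = [np.full((2, 2), v) for v in (1.0, 2.0, 3.0)]
average, weights = likelihood_weighted_average(candidates, [-4.0, -1.0, -2.5])
```

Samples with low likelihood contribute almost nothing to the average, so a poor guess at the spectrum does not distort the final model.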

Paper, Poster, GitHub

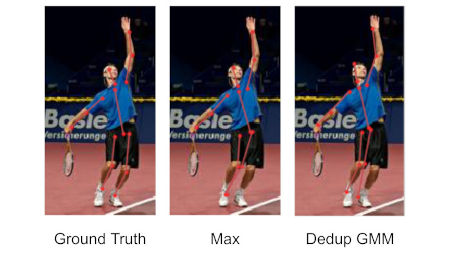

Translating 2D pose heatmaps to continuous keypoints.

In this work, we tackle the problem of 2D human pose estimation. We compare three approaches. The baseline approach is a deconvolutional head model (Xiao et al. 2018). Our second approach, which takes inspiration from Sun et al.'s (2018) integral regression approach, is a heatmap regression network. Finally, our third approach appends a "deduplication" step to the deconvolutional head model by using Gaussian Mixture Models to "deduplicate" joints that are predicted to be at the same location by the network.
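The idea behind integral regression, turning a heatmap into a continuous keypoint, can be sketched as a soft-argmax: take a softmax over the heatmap and compute the expected pixel coordinate. This is a NumPy illustration under assumptions (the `beta` sharpening factor and the synthetic heatmap are made up), not the network described above.

```python
import numpy as np

def soft_argmax_2d(heatmap, beta=1.0):
    """Return the expected (x, y) location under a softmax over the heatmap."""
    h, w = heatmap.shape
    # Softmax over all pixels; subtracting the max keeps exp() stable.
    logits = beta * (heatmap - heatmap.max())
    probs = np.exp(logits)
    probs /= probs.sum()
    # Expected coordinate: pixel coordinates weighted by probability.
    ys, xs = np.mgrid[0:h, 0:w]
    return float((probs * xs).sum()), float((probs * ys).sum())

# Synthetic heatmap: a Gaussian bump whose centre lies between pixels.
ys, xs = np.mgrid[0:64, 0:64]
heatmap = np.exp(-((xs - 20.5) ** 2 + (ys - 40.25) ** 2) / (2 * 2.0 ** 2))
x, y = soft_argmax_2d(heatmap, beta=20.0)
```

Unlike a hard argmax, the result is not restricted to integer pixel positions, and the operation is differentiable. A small `beta` lets background pixels pull the estimate toward the image centre, which is why the example uses a large value.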

Paper, Colab Notebook

A motivational mood tracker powered by AI.

Pilot strives to boost mood more effectively than current trackers by actively listening and responding, and by introducing people to unique places and cultures around the world. Users are prompted to discuss the events of the day out loud, though they can enter text if they wish. This style of input is more efficient and natural than the process required by most mood trackers, and it encourages comprehensiveness and candor. Pilot then responds to the individual's sentiment and prompts them to reflect further on their current mood with a probing question. Once the user has responded, Pilot suggests how the user may be feeling on three scales (mood, anxiety, and cynicism), which the user can adjust as they see fit. Our website won the "Rev.ai Speech API Challenge" and the "DRW: Best Data Visualization Hack" awards at HackMIT 2019.

Devpost, GitHub

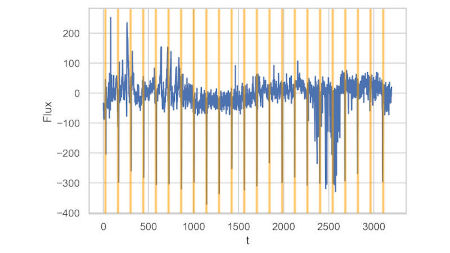

Detecting exoplanets with machine learning.

To train and evaluate exoplanet detection models, we used data from the Kepler space telescope, which includes flux (light intensity) measurements from several thousand stars over time, as well as a binary variable indicating whether each of them has one or more exoplanets. Our first analysis uses a standard exoplanet detection technique called box least squares. In our second analysis, we construct several features from each time series and run these features through several "classic" (i.e. not deep learning) machine learning classifiers. In our last analysis, we explore two deep learning methods.
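To give a feel for box least squares, here is a brute-force sketch: fold the light curve at each trial period, slide a box of fixed duration across phase, and keep the box that most reduces the squared residuals. The function name, the grids, and the synthetic light curve are assumptions for illustration; this is neither the project's code nor an optimized implementation.

```python
import numpy as np

def box_least_squares(time, flux, periods, duration, n_phase=50):
    """Brute-force box fit; returns (best_period, best_depth, best_score)."""
    time = np.asarray(time, dtype=float)
    flux = np.asarray(flux, dtype=float)
    flux = flux - flux.mean()
    n = len(flux)
    best_period, best_depth, best_score = None, 0.0, -np.inf
    for period in periods:
        phase = (time % period) / period
        width = duration / period
        for start in np.linspace(0.0, 1.0, n_phase, endpoint=False):
            # Points inside the box; the modulo lets the box wrap past phase 1.
            in_box = ((phase - start) % 1.0) < width
            n_in = int(in_box.sum())
            if n_in == 0 or n_in == n:
                continue
            s = flux[in_box].sum()
            if s >= 0:  # keep dips only; a transit lowers the flux
                continue
            # For zero-mean flux, a two-level (in/out of box) fit reduces the
            # sum of squared residuals by s^2 / (n * r * (1 - r)).
            r = n_in / n
            score = s ** 2 / (n * r * (1.0 - r))
            if score > best_score:
                depth = -s * n / (n_in * (n - n_in))  # out-of-box minus in-box mean
                best_period, best_depth, best_score = period, depth, score
    return best_period, best_depth, best_score

# Synthetic light curve (made-up values): period 3, duration 0.2, depth 0.01.
rng = np.random.default_rng(0)
time = np.arange(0.0, 30.0, 0.01)
flux = 1.0 + rng.normal(0.0, 0.002, time.size)
flux[((time - 1.0) % 3.0) < 0.2] -= 0.01
periods = np.linspace(2.0, 4.0, 41)
period, depth, score = box_least_squares(time, flux, periods, duration=0.2)
```

The recovered depth is typically a little shallower than the injected one because the coarse phase grid rarely aligns the box exactly with the transit.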

Paper

Supporting opioid addicts through human connection.

Core seeks to connect individuals working to overcome opioid and other drug addictions with strong, supportive volunteer mentors. At the same time, it fills another need: many college students, retirees, and moderately unfulfilled professionals are looking for a way to help bring about positive change without wasting time on transportation or prep. Core brings a human-centered, meaningful volunteer experience directly to people in the form of an app. Our website won the "Best Use of Facebook API" award at HackMIT 2018.

Devpost, GitHub

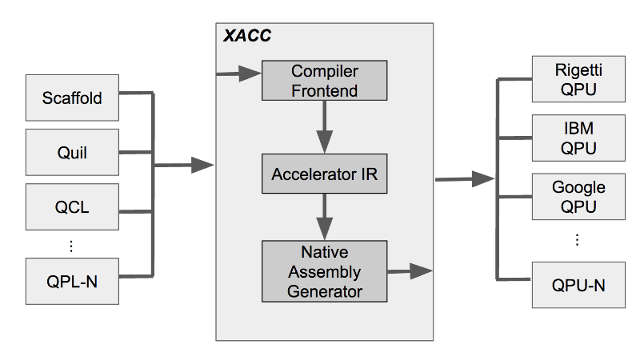

Compiling quantum languages into the XACC intermediate representation.

Eclipse XACC is a framework that allows programmers to write their code in one quantum language and then execute this code on various quantum computing backends. For example, they might write code in the Quil quantum language and then, using XACC, execute it on the IBM Quantum Experience backend. During my internship at Oak Ridge National Laboratory, I used ANTLR and C++ to develop robust compilers that parse and translate the IBM OpenQASM, Rigetti Quil, and ProjectQ quantum languages into the XACC intermediate representation.

OpenQASM Compiler

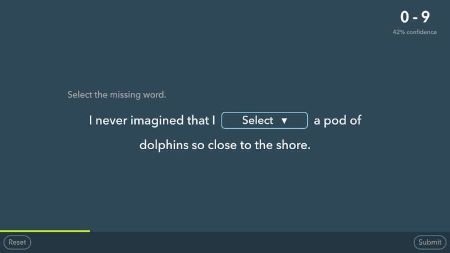

An engaging, adaptive diagnostic test.

I designed and developed an adaptive diagnostic test (both the UI and the adaptive algorithm) using React and Redux for PrepFactory, an EdTech startup. The diagnostic has many different interactive and modular question types to make it more engaging. By adapting to the user, the diagnostic quickly determines an estimated score range and confidence level for a student's performance on the ACT.

Diagnostic

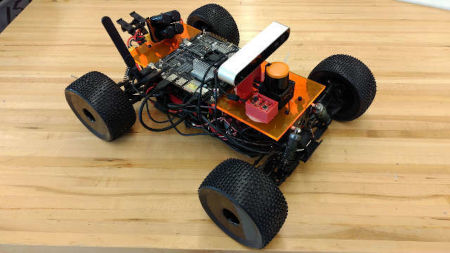

An exploratory mini autonomous vehicle.

A friend and I built and programmed a 1/8th-scale car that drives autonomously to a specified location, using ROS to maintain and update a 2D map. We mounted a laser range-finder and an inertial measurement unit on the car, and used an Nvidia Jetson TX1 for computation. The novel component of our project was trying to intelligently couple the Adaptive Monte Carlo Localization (AMCL) and Gmapping simultaneous localization and mapping (SLAM) algorithms. This way, the car dynamically determines the optimal path through both known and unknown areas.

Paper, GitHub

An agile, autonomous robot.

As the lead programmer, I designed a command-based software architecture with distinct, interoperable subsystems. This structured architecture keeps all our code clean and extensible. I programmed the robot in Python to drive to a loading position autonomously and to self-correct using a feed-forward proportional-integral-derivative (PID) controller, with OpenCV for computer vision. I also developed a dashboard with Electron that displays useful information about the robot, including a sonar display. Our team won the Innovation in Control Award.
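For readers unfamiliar with the control scheme, a PID controller with a feed-forward term can be sketched as follows. The class, the gains, and the first-order plant used to exercise it are all hypothetical; the robot's real controller and tuning are not shown here.

```python
class FeedForwardPID:
    """PID feedback plus a feed-forward term proportional to the setpoint."""

    def __init__(self, kp, ki, kd, kf):
        self.kp, self.ki, self.kd, self.kf = kp, ki, kd, kf
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        feedback = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Feed-forward supplies the output we expect to need for this setpoint,
        # so the feedback terms only have to correct the remaining error.
        return self.kf * setpoint + feedback


def simulate(controller, setpoint=2.0, dt=0.02, steps=1000, tau=0.5):
    """Drive a made-up first-order plant and return its final speed."""
    speed = 0.0
    for _ in range(steps):
        command = controller.update(setpoint, speed, dt)
        speed += dt * (command - speed) / tau  # simple Euler step
    return speed


final_speed = simulate(FeedForwardPID(kp=1.0, ki=0.5, kd=0.05, kf=1.0))
```

In this toy plant the feed-forward gain alone would reach the setpoint eventually; the feedback terms speed up the response and absorb any mismatch between the model and the real system.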

GitHub

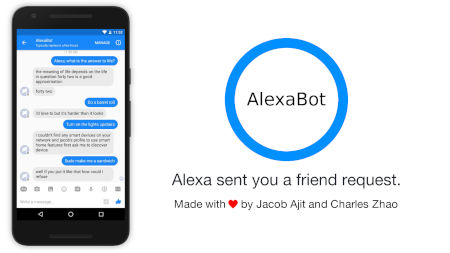

Talk to Amazon Alexa through Facebook Messenger.

AlexaBot is a Facebook Messenger bot that lets a user interact with Amazon Alexa remotely rather than through an Echo and through text rather than speech. A friend and I wrote the bot in Python using Tornado while the Messenger Platform was in beta, and we combined a speech recognition API with a text-to-speech API to communicate with the Alexa Web Service. Our bot has had more than 2000 users, and we ensured reliable uptime and quick bug-fixing during its increase in popularity.

GitHub

My first experience with full-stack development.

I used Django (for educational purposes) and Materialize to develop my old résumé website. It is hosted on an Ubuntu server I set up at home with Gunicorn, Nginx, and Supervisor, and most of the content is dynamically generated from a PostgreSQL database. Using Django allows me to easily add, remove, and edit items through an administrative interface. I also implemented full SSL encryption using Let's Encrypt and CloudFlare. This portfolio website uses a similar architecture.

Website

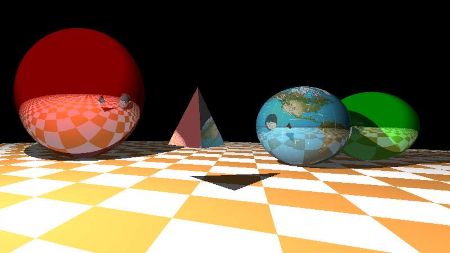

Rendering 3D objects in parallel.

Written in C with OpenGL and OpenMP, my program renders various 3D objects in parallel and lets the user move around the scene. The program can render spheres and triangles (being able to render triangles allows it to render essentially any 3D object), shadows and lighting, recursive reflection, and animation.

Using Hough transforms and k-means clustering to detect shapes.

Using vanilla Python (no libraries like OpenCV), this program detects circles and lines in an image. It uses Hough transforms with k-means clustering to determine the centers and radii of circles, and the positions and orientations of lines. The program converts the image to grayscale, then performs a Gaussian blur, then performs Canny edge detection, and finally performs Hough transforms with k-means clustering. In the image thumbnail, the program detected the car's wheels (marked in green).
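The voting step of a Hough transform for lines can be sketched in plain Python. This simplified version assumes edge pixels are already available as (x, y) pairs and returns only the single strongest line; the blur, Canny, circle detection, and k-means steps are omitted, and the function name and bin sizes are assumptions.

```python
import math

def hough_strongest_line(edge_points, n_theta=180, rho_step=1.0):
    """Vote in (rho, theta) space and return (rho, theta, votes) for the peak.

    A line is written as x*cos(theta) + y*sin(theta) = rho.
    """
    thetas = [math.pi * k / n_theta for k in range(n_theta)]
    votes = {}
    for x, y in edge_points:
        # Each edge point votes for every line that could pass through it.
        for k, theta in enumerate(thetas):
            rho = x * math.cos(theta) + y * math.sin(theta)
            key = (round(rho / rho_step), k)
            votes[key] = votes.get(key, 0) + 1
    (rho_bin, k), count = max(votes.items(), key=lambda item: item[1])
    return rho_bin * rho_step, thetas[k], count

# Hypothetical edge map: a horizontal line y = 10 plus a few stray points.
points = [(x, 10) for x in range(50)] + [(3, 40), (25, 30), (44, 2)]
rho, theta, count = hough_strongest_line(points)
```

Collinear points all vote for the same (rho, theta) bin, so a line shows up as a peak in the accumulator even when stray edge pixels are present.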

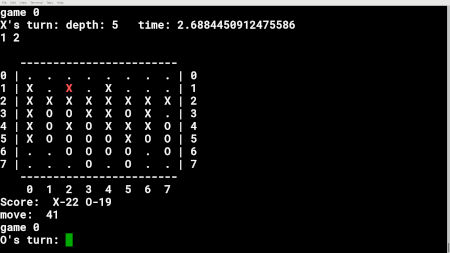

A (really good) Othello player.

Othello, also known as Reversi, is a board game. I implemented Principal Variation Search (a.k.a. NegaScout), which is a minimax algorithm that improves upon alpha-beta pruning, and I based the heuristics on Othello strategy. I created a program to determine optimal weights for the heuristics by playing programs with different weights against each other.
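The search itself can be sketched on a toy game tree. Here nested lists stand in for positions and integer leaves stand in for heuristic scores from the point of view of the side to move; this representation is an assumption for illustration, and the Othello move generation and heuristics are not shown. A plain negamax is included only as a reference to compare against.

```python
import math

def negamax(node):
    """Exhaustive reference search with no pruning."""
    if not isinstance(node, list):
        return node
    return max(-negamax(child) for child in node)

def pvs(node, alpha=-math.inf, beta=math.inf):
    """Principal variation search in negamax form on a nested-list tree."""
    if not isinstance(node, list):
        return node
    for index, child in enumerate(node):
        if index == 0:
            # Search the first (presumed best) move with the full window.
            score = -pvs(child, -beta, -alpha)
        else:
            # Null-window probe: only asks whether this move beats alpha.
            score = -pvs(child, -alpha - 1, -alpha)
            if alpha < score < beta:
                # The probe failed high, so re-search with the full window.
                score = -pvs(child, -beta, -alpha)
        alpha = max(alpha, score)
        if alpha >= beta:
            break  # beta cut-off
    return alpha

# Two-ply toy tree: the best reply sets give the root a value of 3.
tree = [[3, 5], [2, 9], [0, 7]]
value = pvs(tree)
```

The null-window probes are cheap because they cut off quickly, so the approach pays off when move ordering usually puts the best move first.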

Changelog

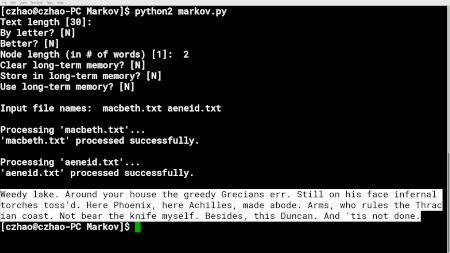

Generates sentences from a given text.

This Python program takes in text files and produces text using a Markov chain generated from those text files. This program has several options for text generation, including the level and order of each n-gram. This program also gives the option of storing data in “long-term memory,” which is a JSON file, so that the program can learn over time by updating the long-term memory Markov chain. For example, given the full texts of the Aeneid and Macbeth, my program generated the following: "I a hundred mouths, a hundred mouths, a hundred tongues. And bridges laid above to join their hands; a secret of her hair is crown'd."
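The core of a Markov chain text generator fits in a few lines. The sketch below works at the word level with a fixed order and keeps everything in memory; the function names and sample text are hypothetical, and the JSON "long-term memory" and other options described above are left out.

```python
import random
from collections import defaultdict

def build_chain(text, order=2):
    """Map each run of `order` words to the words that follow it in the text."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain

def generate(chain, length=20, seed=None):
    """Walk the chain from a random starting state for up to `length` words."""
    rng = random.Random(seed)
    state = rng.choice(list(chain))
    output = list(state)
    for _ in range(length - len(state)):
        followers = chain.get(state)
        if not followers:
            break  # dead end: this state never had a successor
        output.append(rng.choice(followers))
        state = tuple(output[-len(state):])
    return " ".join(output)

# Made-up sample text for illustration.
sample = "the quick brown fox jumps over the lazy dog and the quick brown cat sleeps"
chain = build_chain(sample, order=2)
sentence = generate(chain, length=10, seed=1)
```

Because followers are stored with repetition, more frequent continuations are picked proportionally more often. A higher order produces more coherent output but copies longer stretches of the source.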